This article highlights some of the technical, business and industrial aspects of Big Data.

Introduction

It flows like a flood and overwhelms everything that comes in its path. Is it a torrential flood, a cloudburst or a tsunami? No, it is “BIG DATA”, the latest buzz in the technology industry. Every day, we create about 2.5 quintillion (2.5×10¹⁸) bytes of data, and 90% of this data has been produced in the last two years alone. According to some estimates:

- Twitter sees 400 million tweets per day, or approximately 90MB/sec of data created (June 2012).

- Facebook had 901 million monthly active users and more than 125 billion friend connections at the end of March 2012.

- Facebook collects an average of 15TB of data every day, or 5000+ TB per year, and has more than 30PB in one cluster (March 2011).

- There are 160 million blogs currently.

- Google has more than 50 billion pages in its index (December 2011).

- YouTube has 3 billion views per day, and 48 hours of video are uploaded per minute (May 2011).

- Amazon’s S3 cloud service had some 262 billion objects at the end of 2010, with approximately 200,000 requests per second.
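As a rough sanity check on the figures in the list above, the daily and per-second rates can be converted with a few lines of arithmetic. This is only a sketch: it assumes decimal units (1 MB = 10^6 bytes) and a 365-day year, and the helper names are ours rather than anything taken from the cited sources.

```python
# Assumption: decimal units and a 365-day year; helper names are illustrative.
SECONDS_PER_DAY = 24 * 60 * 60
MB = 10**6


def tb_per_year(tb_per_day, days=365):
    """Scale a daily volume in TB to a yearly volume in TB."""
    return tb_per_day * days


def bytes_per_item(rate_mb_per_sec, items_per_day):
    """Average bytes per item implied by a data rate and a daily item count."""
    return rate_mb_per_sec * MB * SECONDS_PER_DAY / items_per_day


# Facebook: 15 TB per day scaled to a year, matching the "5000+ TB" figure.
facebook_tb_per_year = tb_per_year(15)

# Twitter: 90 MB/sec spread over 400 million tweets per day.
tweet_bytes = bytes_per_item(90, 400_000_000)

print(facebook_tb_per_year)  # 5475
print(tweet_bytes)           # 19440.0
```

The second number suggests that the quoted 90MB/sec covers roughly 19 KB per tweet, so it presumably counts metadata and related traffic rather than the tweet text alone.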

Well, what happens to all this data? Is it only meant to be stored in data centers around the world? The answer is no. Over the last decade, institutions have been working to innovate, introduce and practice techniques to handle this enormous amount of data. Their aim is to leverage the huge trove of unstructured/semi-structured data for a more meaningful purpose, to extract value out of it and drive goals.

The term “Big Data” was probably first introduced in the paper ‘Visually exploring gigabyte data sets in real time’ in 1999. Lately, it has been used to describe huge sets of data of the kind discussed above. Some critics say that it is purely a marketing term used by big companies, but we feel it has much wider ramifications for our industry as a whole. O’Reilly says it is the latest “buzzword” of IT in 2012.

In this article, we try to highlight some of the technical, business and industrial aspects of Big Data. We also try to throw some light on the definition, importance, pros and cons, technologies, three V’s and future of Big Data.

What is “Big”? How Much is “Big”?

Data in a form that cannot easily be represented in conventional databases is known as unstructured or semi-structured data. A collection of such data so large that conventional software is unable to capture, manage and process it within a stipulated amount of time is known as “BIG DATA”. It is not an exact term. It is characterized by the exponential accumulation of unstructured data, and it describes data sets that are large and raw and that conventional relational databases are unable to analyze.

As for ‘how much is BIG’, it is a moving target that grows by the day. Currently, in 2012, it ranges from a few dozen terabytes to many petabytes of data in a single data set [2]. We think it also depends on the context in which it is used. For example, the size of the data sets would vary greatly if we compared astronomical data with data collected from an online feedback form.

Notwithstanding the fact that the data itself is overwhelming, the magnitude and complexity of extracting information out of it and making sense of it is “big” too. Scientists around the world are looking for ways to tackle this complexity. The best example of that is http://amplab.cs.berkeley.edu/.

“Big” Growth

Mobile devices, remote sensing technologies, software logs, cameras, microphones, radio-frequency identification, wireless sensors, weather satellites and sensors, scientific experiments, social networks, internet text and documents, Internet search indexing, call detail records, astronomy, atmospheric science, genomics, biogeochemical, biological and other complex and often interdisciplinary scientific research, military surveillance, medical records, photography archives, video archives, and large-scale e-commerce all contribute. As more sensors, mobile devices, cameras, etc. are added to the network, as more people share photos, music, etc., and as more netizens join social networks, the size increases. A few examples of systems and the amount of data they generate:

CERN: The Large Hadron Collider project at CERN produced 22PB of data this year after accepting only 1% of the data produced, which is about 100MB per sec.

FLICKR: More than 4 billion queries per day, ~35M photos in squid cache (total), ~2M photos in squid’s RAM, ~470M photos, 4 or 5 sizes of each, 2 PB raw storage

FACEBOOK: As of July 2011, 750 million worldwide users uploaded approximately 100 terabytes of data every day to the social media platform. Extrapolated against a full year, that’s enough data to manage the U.S. Library of Congress’ entire print collection—3,600 times over [3]

Not only this, but the growing per-capita capacity to store information is also responsible for this huge data explosion. Data storage was very expensive about a quarter of a century ago. As storage prices came down, more and more data got stored, and current estimates suggest that the per-capita capacity to store information has roughly doubled every 40 months since the 1980s.
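To get a feel for what “doubling every 40 months” means, the rate can be converted into an annual growth rate and a per-decade factor. The sketch below assumes smooth exponential growth, which is our simplification of the estimate; the function name is hypothetical.

```python
def growth_factor(months, doubling_months=40.0):
    """Factor by which capacity grows over `months`, assuming it
    doubles every `doubling_months` (smooth exponential growth)."""
    return 2.0 ** (months / doubling_months)


# Implied annual growth rate: 2**(12/40) - 1, a little over 23% per year.
annual_rate = growth_factor(12) - 1

# Growth over a decade (120 months = three doubling periods).
decade_factor = growth_factor(120)

print(f"annual growth: {annual_rate:.1%}")
print(f"growth over ten years: x{decade_factor:.0f}")
```

Under this assumption, storage capacity per person grows about eightfold every ten years.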

Is that the end of the story as far as the sources of, and rationale behind, the growth of data are concerned? No, please add enterprise ‘structured’ data to the list too, which can provide wonderful insights. Metadata (data about data), which is growing twice as fast as digital data itself, also adds to the list.

Why Is Everybody Talking About “Big” Data?

First, let’s take a look at some statistics. In the last year, it appeared about 174,000 times in the New York Times Technology section, about 11,040 times in news articles on CNET, and in 75 articles on O’Reilly. What is it? “BIG DATA”. The leading vendors each have a page dedicated to it:

- IBM - http://www-01.ibm.com/software/data/bigdata/

- CISCO - http://www.cisco.com/en/US/solutions/ns340/ns517/ns224/big_data.html

- Oracle - http://www.oracle.com/us/technologies/big-data/index.html

- EMC2 - http://www.emc.com/microsites/bigdata/index.htm

The press can’t stop talking about it, all the leading newspapers are discussing it, and tech websites have it all over. Why? Let’s delve deeper into the matter.

According to Forrester’s research, only about 5% of the data available to enterprises is effectively utilized, as the rest is too difficult and too expensive to analyze. A McKinsey Global Institute report claims that a retailer embracing big data has the potential to increase its operating margin by more than 60%. As the price of storing data came down and companies began to realize its hidden potential, they started focusing on it to drive business goals, set future goals, gather customer feedback, etc. They realized that this hidden treasure trove can also be used to create business value. This has been corroborated by a report published by the WEF, which declared that data is a new class of economic asset, like currency or gold. When used correctly, big data can yield insights to develop, refine or redirect business initiatives; discover operational roadblocks; streamline supply chains; improve operational efficiency; better understand customers; create new revenue streams and differentiated competitive advantage; propose entirely new business models; and develop new products and services.

‘What Big Data is seeing now looks like the classic industrial curve. There is the first discovery of something big, leading to establishing principles like scientific rules. Science moves toward engineering as a means to manufacturing, resulting in mass deployment’.

The “Big” Role of Technology …

“Big Data technologies describe a new generation of technologies and architectures, designed to economically extract value from very large volumes of a wide variety of data, by enabling high velocity capture, discovery and/or analysis.” -- IDC.

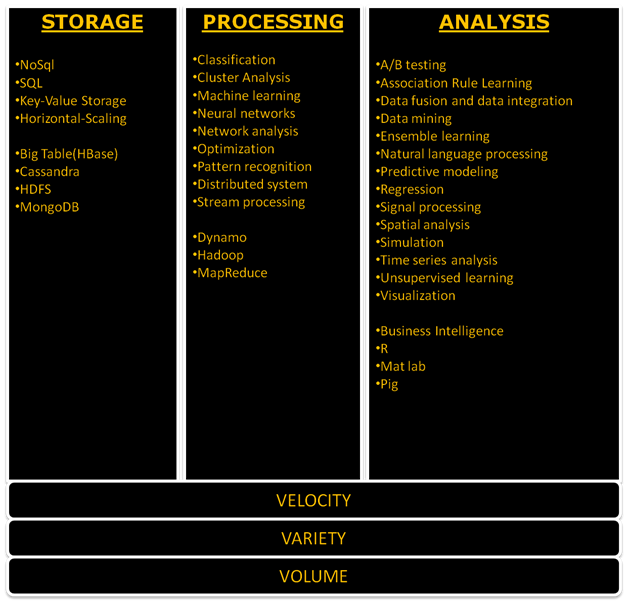

Technology plays a huge role in the “Age of big data”. We take a detailed look at it in the following section, but with a twist: we try to map the technologies and techniques against the three V’s of “big data”.

Volume

‘The more the merrier’ applies very well to ‘big data’. A model designed with 3 factors will generally be less effective than a model with 300 factors. This is the role of VOLUME in big data. As discussed in earlier sections, huge amounts of data are produced daily, but the challenge lies in storing and processing them effectively. The need of the hour was scalable storage and distributed querying. As traditional relational databases were unable to cope with this huge volume of data, massively parallel processing architectures such as data warehouses and MPP databases provided the technology for structured data, and HDFS, Bigtable, etc. for unstructured data.

Velocity

A person stuck in a traffic jam would not wait days for information about the latest traffic situation so that he can take the shortest clear route home. He needs the information instantly. This is the role of VELOCITY in big data. The huge volume of data needs to be processed very fast and the analysis needs to be completed quickly. Currently, two kinds of technology help keep things fast for big data: streaming data (or complex event processing) and in-memory processing. There are several proprietary and open source tools for this; examples include MapReduce, S4 and Storm. There is another factor to keep in mind: information retrieval. Here technologies like NoSQL help, as they are optimized for the fast retrieval of pre-computed information.
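To make the MapReduce idea mentioned above a little more concrete, here is a minimal single-process sketch of its three steps (map, shuffle, reduce) applied to the classic word count problem. It is plain Python written for illustration and does not use the Hadoop API; a real framework runs the same steps in parallel across many machines. All function names are our own.

```python
from collections import defaultdict


def map_phase(record):
    """Map: emit a (word, 1) pair for every word in one input record."""
    for word in record.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(records):
    """Run map, shuffle and reduce in sequence over the records."""
    mapped = (pair for record in records for pair in map_phase(record))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data is big", "data flows fast"])
print(counts["big"], counts["data"], counts["fast"])  # 2 2 1
```

Because each map call looks at one record and each reduce call at one key, both phases can be spread over many workers, which is what lets the approach scale to large volumes.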

Variety

One of the underlying characteristics of big data is the diversity of the data sources, which results in a variety of data types. Data is not perfectly ordered and ready for processing, and one of the biggest challenges of processing is extracting meaningful information out of it. This is the role of VARIETY in big data. The first technique used is SQL-NoSQL integration. Integrating the relational and non-relational worlds provides the most powerful analytics by bringing together the best of both, and it also provides storage solutions for various data types. Linked data and semantics are two techniques which have gained some popularity too. NLP also plays a role in entity extraction. Statistics plays a big role in flattening out and extracting data sets. The open source statistical language R provides great integration points for several big data tools and solutions. Apache projects also offer a couple of solutions which cater to this space and which, along with a couple of proprietary technologies, are currently used to solve the problems of variety.

There are two issues which require special mention here: ‘security’ and ‘cloud computing’. Security is very important for big data companies, and it can have adverse effects in two ways. First, by storing information which it is not legal to store, a company makes itself vulnerable, and accidentally leaking information such as credit card details or social security information can cause a lot of damage to its reputation. Secondly, a pure security breach by hackers can expose the entire data set. Technology plays a crucial role in ensuring security, but there is a need to control it more effectively. The advent of cloud computing came as a boon for big data, as it became easier and cheaper for smaller companies to utilize big data services in the cloud. Cloud storage has also helped a lot in storing large amounts of data effectively and making them easily accessible.

The bottom line of big data technology is: the more data we process, the better we predict. Larger and wider data sets have the ability to produce more insightful results than smaller data sets.

“Big Values” Created

Education, Physics, Economics, Astronomy, Telecom, Healthcare, Financial Services, Management, Transportation, Digital Media, Retail, Law Enforcement, Energy and Utilities, Social Media, Online Services and Security are some of the domains where “big data” is already creating a lot of value today, and the list is growing day by day. The aim of this section is not to highlight the breadth of the domains but rather to find out what value “big data” creates. First let’s see what generic value it unlocks. According to McKinsey:

- Big data can unlock significant value by making information transparent and usable at much higher frequency

- As organizations create and store more transactional data in digital form, they can collect more accurate and detailed performance information on everything from product inventories to sick days, and therefore expose variability and boost performance. Leading companies are using data collection and analysis to conduct controlled experiments to make better management decisions; others are using data for basic low-frequency forecasting to high-frequency nowcasting to adjust their business levers just in time

- Big data allows ever-narrower segmentation of customers and therefore much more precisely tailored products or services

- Sophisticated analytics can substantially improve decision-making

- Big data can be used to improve the development of the next generation of products and services

Now let’s take a few examples from these domains and see how “big data” has added value to them.

Heavy Industrial Machinery

At GE, complex, high-volume sensor data has been in use for years to monitor and test industrial equipment such as turbines, jet engines, and locomotives. Big Data is currently used to predict the performance and maintenance needs of these heavy machines. It also helps to deal with unexpected downtime. It has helped GE use more parameters and data points that were not in use previously.

Retail

Sears was using about 10% of the data produced by its stores, and it took eight weeks to correctly calculate “price elasticity”, which is crucial in retail. With Hadoop and Big Data techniques, Sears is not only able to use 100% of the data it produces, it also calculates “price elasticity” in almost real time. Big Data has helped Sears set more competitive prices and move inventory according to current demand.
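For readers unfamiliar with the term, price elasticity of demand is usually defined as the percentage change in quantity sold divided by the percentage change in price. The sketch below uses that textbook definition with made-up numbers; it is not a description of how Sears actually computes it, which the sources do not reveal.

```python
def price_elasticity(old_price, new_price, old_qty, new_qty):
    """Textbook price elasticity of demand:
    (% change in quantity) / (% change in price)."""
    pct_qty = (new_qty - old_qty) / old_qty
    pct_price = (new_price - old_price) / old_price
    return pct_qty / pct_price


# Hypothetical example: a 10% price rise (10 -> 11) is followed by
# a 15% drop in units sold (1000 -> 850).
elasticity = price_elasticity(10.0, 11.0, 1000, 850)
print(round(elasticity, 2))  # -1.5
```

A magnitude above 1, as in this invented example, means demand is elastic: the drop in volume outweighs the gain from the higher price. Recomputing this per product and per store from full sales data is the kind of workload that benefits from a distributed platform.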

Medical Science

The National Cancer Institute, along with UC Santa Cruz, is planning to create the world's largest repository for cancer genomes. They claim that it will be used in "personalized" or "precision" care, whereby the treatment targets specific genetic changes found in an individual patient's cancer cells. It will help them complete the molecular characterization of cancer, which will be of immense help. The entire setup runs with the help of “Big Data” techniques.

Is the Future “Big”?

Well, there are skeptics who call it a bubble, and there are forecasts which predict a healthy future. We will look at the brighter side in this section. According to an IDC press release of 7th March 2012, a worldwide Big Data technology and services forecast shows the market is expected to grow from $3.2 billion in 2010 to $16.9 billion in 2015. This represents a compound annual growth rate (CAGR) of 40%, or about 7 times that of the overall information and communications technology (ICT) market.
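The quoted growth rate can be checked against the two market sizes with the standard CAGR formula, (end / start)^(1 / years) - 1. The short sketch below uses only the figures from the forecast; the function name is ours.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# $3.2 billion in 2010 growing to $16.9 billion in 2015 (5 years).
rate = cagr(3.2, 16.9, 5)
print(f"{rate:.1%}")
```

The result comes out just under 40%, which is consistent with the rounded figure in the press release.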

Forbes claims that ‘Big Data is the most exciting sector in IT because these new approaches to data management, business analytics and application development are enabling new, game-changing business models. And we are at just the beginning of this new wave of innovation’ [8]. Even as we write this, a completely new ecosystem is coming up to meet this new reality head on. Companies that collect data, aggregate data, mine data for insights and store data are all part of this ecosystem. Its final shape is currently unclear, but it is certainly shaping up nicely.

The future of “big data” from a business perspective looks exciting, so let’s take a look at what technology has in store for it. The classic problem of “big data” is assembling the data and preparing it for analysis. The multitude of systems leaving digital traces store data in different formats. Assembling, standardizing, normalizing, cleaning up and selecting the crème de la crème of the data for analysis is the crux of the problem. This is currently handled by Hadoop and other technological advances such as high-speed data analysis and in-memory processing. The challenges which remain are processing huge amounts of data very quickly, defining a platform, making the technology much more accessible, removing the complexities and making the data more secure. The speed of data processing will improve as in-memory technologies and the like evolve. The next challenge would be to create a high-availability platform which takes the complexity out of processing and analyzing huge amounts of data. Creating the platform would involve developing tools for quickly digesting huge amounts of data and extracting the juice. The process should also be made simpler, so that a layman in technology can perform the task. Techniques for effectively using the pointers obtained from the analysis would also gain attention.

“The future of Big Data is therefore to do for data and analytics what Moore's Law has done for computing hardware, and exponentially increase the speed and value of business intelligence. Whether it is linking geography and retail availability, using patient data to forecast public health trends, or analyzing global climate trends, we live in a world full of data. Effectively harnessing Big Data will give businesses a whole new lens through which to see it.”

Big Data = Big Opportunities

McKinsey estimates that by 2018, in the United States alone, there will be a shortfall of between 140,000 and 190,000 graduates with “deep analytical talent.” According to an Economic Times estimate, India alone will require a minimum of 100,000 data scientists in the next couple of years, in addition to scores of data managers and data analysts, to support the fast-emerging Big Data space. The job trends according to a leading job portal are as follows.

So the future looks bright as far as the opportunities are concerned, but there is a need for well-trained professionals in both the technology and analytics domains.

What about the “Big” Frown?

Privacy, effectiveness, coercive marketing, social stratification, discrimination, security and regulation are some of the terms often used when a critic comments on big data. To be honest, these issues are being raised in forums and circles, and they are true to some extent. Let us see how each of them points a finger at big data.

Take privacy first. As big data deals with multiple sources, it is always possible to correlate information and predict or forecast something about a person which is very private or sensitive in nature, such as personal history, medical conditions, etc. For example, if an agency tries to predict your future from your shopping habits and suggests products accordingly, it is encroaching into territory where it shouldn’t be.

How effective is big data currently? This is another question raised by many. It all depends on whether the raw data used for prediction was able to provide good pointers. As the hype is ‘the more the better’, more data is being stored even if the end product is not effective. There is a risk involved here: as the data set is huge, there is also every chance of ‘false’ discoveries.
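The risk of ‘false’ discoveries can be illustrated with a small calculation. Suppose an analyst tests many candidate patterns in a data set where none of them is real, and calls a pattern a discovery when a statistical test passes at significance level alpha. Under the simplifying assumption that the tests are independent (our assumption, made only for illustration), the expected number of false discoveries and the chance of at least one can be computed directly.

```python
def expected_false_discoveries(num_tests, alpha=0.05):
    """Expected number of tests that pass by chance alone when no
    real effect exists (each passes with probability alpha)."""
    return num_tests * alpha


def prob_at_least_one_false(num_tests, alpha=0.05):
    """Probability that at least one of the independent tests
    passes by chance alone."""
    return 1.0 - (1.0 - alpha) ** num_tests


expected = expected_false_discoveries(100)
at_least_one = prob_at_least_one_false(100)
print(expected)                 # 5.0
print(f"{at_least_one:.3f}")    # 0.994
```

With only 100 patterns tested, a chance finding is almost guaranteed, and big data sets make it easy to test far more than that. This is why findings should be validated on fresh data or with stricter thresholds before they are acted on.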

Think of a situation like this: you walk into a store and buy a laptop, and immediately the shop’s advanced big data system suggests that you should also buy a cover, an anti-virus product and two years of full maintenance support. Well, does this not happen nowadays too? Yes and no. Currently the sales executive or system would probably suggest a few items, but not by inducing fear, whereas the big data system will probably also let you know how many laptops broke or were infected by malware along with that ‘helpful’ suggestion.

In every age of human civilization, there have been societal strata. If policies are determined by data from particular strata of society, they might have an adverse effect on the others. There is also every possibility of one stratum manipulating the results in its favor. This might also play a role in segregating society further.

Security agencies, insurance agencies and credit card companies are using big data to detect crime, fraud, etc. Many people are particularly critical of these practices, as there is every chance that a person whose traits vaguely match those of a criminal is prosecuted, or that the credit limit of a section of the workforce is reduced because some forecast has indicated a slowdown in that industry. Neither is deserved, and this kind of discrimination should be avoided.

Currently, the internet is a free medium for all, with few or no regulations, but with these concerns around, some regulations are bound to come into force. The concern is that with regulations and regulatory bodies in place, there is every chance that the flow of data will be monitored and regulated. This might not be good for the future of big data.

There is also the issue of security. Sometimes a company controls data which it does not own. That data might contain sensitive information, and it becomes the responsibility of the company to protect it. The general complaint is that companies are not serious enough about protecting it, which is evident from the numerous breaches in recent times.

There is a solution for all these issues too: cutting-edge technologies, when judiciously and effectively used, have the power to solve all the issues listed.

Conclusion

When I was a student of computer science a few years ago, I was taught the science of predicting the occurrence of an event by looking at some parameters, and also the theory that as the number of parameters tends towards infinity, the probability tends to one; in other words, I will be able to predict the occurrence of the event more accurately. Isn’t this the same paradigm as big data, with some other factors added? The art of predicting based on past actions and traits is nothing new. It has been in use for ages; it is just that with the advent of new technologies, it has become easier and accessible to many in recent times. It has found acceptance in various fields, and profits have also been gained. It is always better to know certain things before we take an action, and big data helps us here.

History

- 23rd September, 2014: Initial version