Here we show how to implement a vehicle speed trap in Python using object detection with various computer vision and Deep Learning models, together with a procedure for calibrating the physical constants used to convert pixel distances and frame counts into real-world distances and times.

Introduction

Traffic speed detection is big business. Municipalities around the world use it to deter speeders and generate revenue via speeding tickets. But the conventional speed detectors, typically based on RADAR or LIDAR, are very expensive.

This article series shows you how to build a reasonably accurate traffic speed detector using nothing but Deep Learning, and run it on an edge device like a Raspberry Pi.

You are welcome to download code for this series from the TrafficCV Git repository. We are assuming that you are familiar with Python and have basic knowledge of AI and neural networks.

In the previous article, we developed a small computer vision framework that enabled running the various computer vision and Deep Learning models, with different parameters, and comparing their performance. In this article, we’ll look at the different ways of measuring vehicle speed and the different Deep Learning models for object detection that can be used in our TrafficCV program.

Combining VASCAR and ENRADD

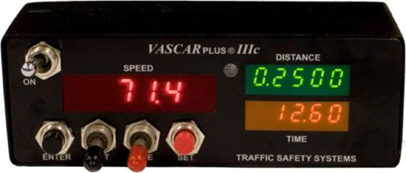

Visual Average Speed Computer And Recorder (VASCAR) is the simplest and most widely used device for vehicle speed detection in areas where speed guns or LIDAR and RADAR detectors are not feasible or are prohibited. VASCAR relies on a simple distance/time calculation: the distance between two landmarks divided by the time a vehicle takes to traverse that distance. Modern VASCAR devices can calculate the speed of a monitored vehicle from a moving patrol car, and can also record video of the monitored vehicle to be used as additional evidence.
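The distance/time calculation behind VASCAR can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name and the example numbers are assumptions and are not taken from TrafficCV or from any VASCAR device.

```python
def average_speed_kmh(distance_m: float, elapsed_s: float) -> float:
    """Average speed in km/h over a known distance and a measured time."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    metres_per_second = distance_m / elapsed_s
    return metres_per_second * 3.6  # 1 m/s equals 3.6 km/h


# Hypothetical example: 100 m between two landmarks, covered in 4 s.
print(average_speed_kmh(100.0, 4.0))
```

The same calculation applies to ENRADD-style sensors, where the elapsed time is simply the interval between the two beam breaks.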

Electronic Non-Radar Detection (ENRADD) systems are similar to VASCAR but rely on electronic sensors placed on a roadway. These sensors fire infrared beams that are broken by passing cars, providing an automatic way to measure the time a vehicle takes to traverse the distance between two landmarks.

Our TrafficCV program could be seen as a hybrid of VASCAR and ENRADD as we use computer vision to automatically detect and estimate the speed of vehicles passing through a Region of Interest (ROI). The TrafficCV operator can set different parameters corresponding to physical quantities, such as the length of the ROI in metres.
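One way to decide whether a detected vehicle is inside the ROI is to test the centre of its bounding box against the ROI rectangle. The sketch below assumes boxes are stored as (x, y, width, height) in pixels; the `Box` type, the `in_roi` helper, and all coordinates are hypothetical and may not match how TrafficCV represents its ROI.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned rectangle in pixels (assumed x, y, width, height layout)."""
    x: int
    y: int
    w: int
    h: int


def centre(box: Box) -> Tuple[float, float]:
    """Return the centre point of a box."""
    return (box.x + box.w / 2, box.y + box.h / 2)


def in_roi(box: Box, roi: Box) -> bool:
    """True if the centre of the box lies inside the ROI rectangle."""
    cx, cy = centre(box)
    return roi.x <= cx <= roi.x + roi.w and roi.y <= cy <= roi.y + roi.h


# Hypothetical ROI: a horizontal band across a 1920x1080 frame.
roi = Box(0, 400, 1920, 400)
print(in_roi(Box(1431, 575, 263, 154), roi))
print(in_roi(Box(100, 50, 200, 100), roi))
```

Testing the centre rather than the whole box keeps the check stable when a vehicle is only partly inside the frame.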

System Calibration

In his article on OpenCV vehicle speed detection, Adrian Rosebrock describes a simple way to calibrate the speed detector parameters that we can adapt in TrafficCV. Position the camera some distance back from the roadway such that two landmarks correspond to the two extremes of the camera’s horizontal field of view. Divide the camera’s horizontal resolution by the physical distance in metres between the landmarks to yield a constant called the pixels-per-metre, or ppm (Rosebrock uses the inverse of this quantity, or metres-per-pixel, but the principle is the same). In the diagram below, this quantity would be 1280/15, or about 85 for a 1280x720 video resolution.
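The calibration arithmetic is small enough to write out directly. The helper names below are illustrative and not part of TrafficCV; the numbers are the 1280-pixel width and 15-metre field of view from the example above.

```python
def pixels_per_metre(horizontal_resolution_px: int, fov_width_m: float) -> float:
    """Pixels-per-metre (ppm) for a camera whose horizontal field of view
    spans fov_width_m metres of roadway."""
    if fov_width_m <= 0:
        raise ValueError("fov_width_m must be positive")
    return horizontal_resolution_px / fov_width_m


def pixels_to_metres(pixels: float, ppm: float) -> float:
    """Convert a horizontal pixel distance to metres using the ppm constant."""
    return pixels / ppm


ppm = pixels_per_metre(1280, 15.0)
print(round(ppm, 2))                        # 85.33
print(round(pixels_to_metres(320, ppm), 2))  # 3.75
```

With this constant, a vehicle that moves 320 pixels across the frame has travelled roughly 3.75 metres.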

To improve accuracy without using trigonometry, segment the ROI into 4 subregions (or 8 if the camera resolution is high enough), and calculate the speed of the vehicle in each subregion by timing how long the vehicle takes to traverse that segment, which is 320 pixels wide (or 160 for 8 subregions). Averaging the speeds across the subregions gives us an estimate of the vehicle’s speed. We can’t use an ordinary wall-clock timer here because the video is processed at a certain FPS rate, so we derive the elapsed time from the frame count and the FPS value instead. If 10 frames have been processed, and FPS=18, we can estimate the time elapsed as 10 / 18 seconds.
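The per-subregion estimate can be sketched as follows. This is a minimal illustration of the arithmetic described above, not TrafficCV's actual implementation; the function name, its parameters, and the frame counts are assumptions.

```python
from typing import Sequence


def estimate_speed_kmh(frames_per_segment: Sequence[int],
                       segment_px: float,
                       ppm: float,
                       fps: float) -> float:
    """Average the per-segment speeds of one vehicle.

    frames_per_segment: frames the vehicle needed to cross each subregion.
    segment_px: width of one subregion in pixels.
    ppm: calibrated pixels-per-metre constant.
    fps: rate at which frames are processed.
    """
    segment_m = segment_px / ppm
    speeds = []
    for frames in frames_per_segment:
        if frames <= 0:
            continue  # skip segments with no usable measurement
        elapsed_s = frames / fps  # e.g. 10 frames at 18 FPS is 10/18 s
        speeds.append(segment_m / elapsed_s * 3.6)  # m/s to km/h
    if not speeds:
        raise ValueError("no segment has a positive frame count")
    return sum(speeds) / len(speeds)


# Hypothetical vehicle: 10 frames per 320-pixel segment at 18 FPS,
# with ppm = 1280 / 15 from the calibration example.
print(round(estimate_speed_kmh([10, 10, 10, 10], 320, 1280 / 15, 18), 1))  # 24.3
```

Each 320-pixel segment is 3.75 m long here, so 10 frames at 18 FPS corresponds to 6.75 m/s, or 24.3 km/h.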

Selecting Object Detection Model

TrafficCV can use different computer vision and Deep Learning object detection models running on the CPU or on the Coral USB AI accelerator. We’ve already seen the Haar cascade classifier that runs on the CPU in action in the last two articles. We'll start with the MobileNet SSD class of models.

Single Shot Multibox Detector (SSD) is a deep neural network for object detection that generates bounding boxes and associated confidence scores for multiple detected objects from a single video frame. The early network layers in SSD networks are based on a standard architecture for image classification. MobileNets are a family of base neural network architectures that are efficient on mobile and embedded computing devices. A MobileNet SSD neural network uses the later layers of the SSD network on top of a MobileNet base network, and can perform object detection that is both accurate and efficient on embedded devices like the Pi.

We can use an implementation of a MobileNet SSD deep neural network on the TensorFlow Lite runtime, which is optimized for edge devices like Pi, as well as for the Coral USB. The ssd_mobilenet_v1_coco_tflite model is trained on 80 Common Objects in Context (COCO) categories:

tcv EUREKA:0.0 --model ssd_mobilenet_v1_coco_tflite --video ../TrafficCV/demo_videos/sub-1504619634606.mp4

We’re using the model together with another video from our demo_videos directory, which also contains multiple vehicles, to get an idea of the model's performance. This model shows a performance increase over the Haar cascade classifier, with the same or better accuracy, although it suffers from significant slowdown when multiple objects are tracked.

TrafficCV: SSD MobileNet v1 on Windows video

Given the way speed detectors are deployed, tracking multiple vehicles isn’t the only scenario we should look at. Another scenario is with the camera positioned at the side of the road with vehicles passing one-by-one. We can test this scenario using another video in our demo_videos folder:

tcv --model ssd_mobilenet_v1_coco_tflite --video ..demo_videos\cars_horizontal.mp4

The cars_horizontal.mp4 demo video has single vehicles passing horizontally across the camera’s FOV:

TrafficCV: SSD MobileNet v1 on Windows

Running with CPU

We should run this on Windows first to get visual feedback as the video resolution is 1920x1080, and pushing video frames across the network would slow down Pi’s processing considerably. We can see the detector picks up the rapidly moving vehicles, although for smaller cars the detector only picks up the car towards the end of the camera’s FOV. On Pi, we can use the --nowindow parameter when running the detector:

tcv --model ssd_mobilenet_v1_coco_tflite --video ..demo_videos\cars_horizontal.mp4 --nowindow

    ______ ___ ___ __ ______ ___ ___
    |_ _|.----.---.-.' _|.' _|__|.----.| | | |
    | | | _| _ | _|| _| || __|| ---| | |
    |___| |__| |___._|__| |__| |__||____||______|\_____/
    v0.1
    11:19:43 AM [INFO] Video window disabled. Press ENTER key to stop.
    11:19:43 AM [INFO] Video resolution: 1920x1080 25fps.
    11:19:43 AM [INFO] Model: SSD MobileNet v1 neural network using 80 COCO categories on TensorFlow Lite runtime on CPU.
    11:19:43 AM [INFO] Using default input mean 127.5
    11:19:43 AM [INFO] Using default input stddev 127.5
    11:19:43 AM [INFO] ppm argument not specified. Using default value 8.8.
    11:19:43 AM [INFO] fc argument not specified. Using default value 10.
    11:19:43 AM [INFO] score argument not specified. Using default score threshold 0.6.
    11:19:44 AM [INFO] Internal FPS: 10; CPU#1: 12.0%; CPU#2: 16.7%; CPU#3: 34.3%; CPU#4: 86.1%; Objects currently tracked: 0.
    11:19:45 AM [INFO] Internal FPS: 11; CPU#1: 19.5%; CPU#2: 23.6%; CPU#3: 10.5%; CPU#4: 100.0%; Objects currently tracked: 0.
    11:19:46 AM [INFO] New object detected at (1431, 575, 263, 154) with id 0 and confidence score 0.6796875.
    11:19:46 AM [INFO] Internal FPS: 12; CPU#1: 13.7%; CPU#2: 27.2%; CPU#3: 15.0%; CPU#4: 100.0%; Objects currently tracked: 1.
    ...
    11:19:54 AM [INFO] Internal FPS: 12; CPU#1: 8.1%; CPU#2: 14.9%; CPU#3: 11.8%; CPU#4: 100.0%; Objects currently tracked: 0.
    11:19:54 AM [INFO] Internal FPS: 12; CPU#1: 20.0%; CPU#2: 17.6%; CPU#3: 10.7%; CPU#4: 100.0%; Objects currently tracked: 0.
    11:19:55 AM [INFO] New object detected at (197, 447, 772, 215) with id 1 and confidence score 0.6328125.
    11:19:55 AM [INFO] Internal FPS: 12; CPU#1: 9.0%; CPU#2: 17.9%; CPU#3: 21.2%; CPU#4: 100.0%; Objects currently tracked: 1.
    11:19:56 AM [INFO] Internal FPS: 12; CPU#1: 8.7%; CPU#2: 12.3%; CPU#3: 12.1%; CPU#4: 100.0%; Objects currently tracked: 1.
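The log above reports a default input mean and stddev of 127.5. A common convention for floating-point TensorFlow Lite image models is to normalize each pixel as (value - mean) / stddev before inference. The sketch below shows that arithmetic only; whether TrafficCV applies it in exactly this form is an assumption, and the function name is hypothetical.

```python
def normalize_pixel(value: float, mean: float = 127.5, stddev: float = 127.5) -> float:
    """Map an 8-bit pixel value into the range the model expects."""
    return (value - mean) / stddev


# With mean = stddev = 127.5, the range 0..255 maps onto -1..1.
print([normalize_pixel(v) for v in (0, 127.5, 255)])  # [-1.0, 0.0, 1.0]
```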

On both Windows and Raspberry Pi, the TrafficCV FPS remains roughly constant at 12-13 with one vehicle tracked at a time. This is about half of the video’s FPS of 25, so there’s probably a dependency or a bottleneck somewhere that is causing the detector to run at half the video’s FPS, independent of the neural network’s object detection speed or hardware. We’ll investigate this issue in the final article in the series.

Running with Edge TPU

Let’s see how MobileNet SSD runs on the Coral USB AI accelerator using the Edge TPU. The Coral device also uses the TensorFlow Lite runtime so the code for this detector is almost identical to our TFLite detector, except here we use the Edge TPU native library delegates:

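    import platform

    import tflite_runtime.interpreter as tflite

    # Name of the Edge TPU shared library for the current operating system.
    EDGETPU_SHARED_LIB = {
        'Linux': 'libedgetpu.so.1',
        'Darwin': 'libedgetpu.1.dylib',
        'Windows': 'edgetpu.dll'
    }[platform.system()]

    # Method of the detector class (hence the self parameter).
    def make_interpreter(self, model_file):
        """Create TensorFlow Lite interpreter for Edge TPU."""
        # An optional '@device' suffix on the model path selects a specific Edge TPU.
        model_file, *device = model_file.split('@')
        return tflite.Interpreter(
            model_path=model_file,
            experimental_delegates=[
                tflite.load_delegate(EDGETPU_SHARED_LIB,
                                     {'device': device[0]} if device else {})
            ])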
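The `split('@')` line in the code above lets a model path carry an optional device suffix. The standalone sketch below isolates that parsing step so it can be run without the Edge TPU runtime installed. The `parse_model_spec` helper and the file names are hypothetical, and the `usb:0` device string is used here only as an example value.

```python
from typing import Dict, Tuple


def parse_model_spec(model_spec: str) -> Tuple[str, Dict[str, str]]:
    """Split 'path@device' into the model path and delegate options."""
    # Star-unpacking leaves `device` as an empty list when there is no '@'.
    model_file, *device = model_spec.split('@')
    options = {'device': device[0]} if device else {}
    return model_file, options


print(parse_model_spec('model_edgetpu.tflite'))
print(parse_model_spec('model_edgetpu.tflite@usb:0'))
```

When no suffix is given, the options dictionary is empty and the delegate is loaded with its defaults.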

    (cv) allisterb@glitch:~/Projects/TrafficCV $ tcv --model ssd_mobilenet_v1_coco_edgetpu --video demo_videos/cars_horizontal.mp4 --nowindow
    _______ ___ ___ __ ______ ___ ___
    |_ _|.----.---.-.' _|.' _|__|.----.| | | |
    | | | _| _ | _|| _| || __|| ---| | |
    |___| |__| |___._|__| |__| |__||____||______|\_____/
    v0.1
    02:16:54 PM [INFO] Video window disabled. Press ENTER key to stop.
    02:16:55 PM [INFO] Video resolution: 1920x1080 25fps.
    02:16:55 PM [INFO] Model: SSD MobileNet v1 neural network using 80 COCO categories on TensorFlow Lite runtime on Edge TPU.
    02:16:58 PM [INFO] Using default input mean 127.5
    02:16:58 PM [INFO] Using default input stddev 127.5
    02:16:58 PM [INFO] ppm argument not specified. Using default value 8.8.
    02:16:58 PM [INFO] fc argument not specified. Using default value 10.
    02:16:58 PM [INFO] score argument not specified. Using default score threshold 0.6.
    02:16:59 PM [INFO] Internal FPS: 10; CPU#1: 8.3%; CPU#2: 19.9%; CPU#3: 9.1%; CPU#4: 9.8%; Objects currently tracked: 0.
    02:16:59 PM [INFO] Internal FPS: 12; CPU#1: 19.1%; CPU#2: 98.6%; CPU#3: 25.0%; CPU#4: 10.0%; Objects currently tracked: 0.
    02:17:00 PM [INFO] Internal FPS: 13; CPU#1: 11.6%; CPU#2: 100.0%; CPU#3: 29.4%; CPU#4: 19.4%; Objects currently tracked: 0.
    02:17:07 PM [INFO] Internal FPS: 14; CPU#1: 16.4%; CPU#2: 97.1%; CPU#3: 11.4%; CPU#4: 11.6%; Objects currently tracked: 0.
    02:17:08 PM [INFO] Internal FPS: 14; CPU#1: 21.7%; CPU#2: 98.6%; CPU#3: 20.6%; CPU#4: 8.7%; Objects currently tracked: 0.
    02:17:08 PM [INFO] New object detected at (156, 482, 789, 180) with id 0 and confidence score 0.625.
    02:17:08 PM [INFO] Internal FPS: 14; CPU#1: 27.8%; CPU#2: 98.6%; CPU#3: 10.7%; CPU#4: 11.1%; Objects currently tracked: 1.

As expected, the FPS stays in the same range as on the CPU, settling at around 12-14. Interestingly, the Edge TPU model appears to miss the first smaller car that passes quickly through the FOV, whereas the TF Lite version of the model was able to pick it up just before it moved out of frame. Edge TPU models are "quantized" versions of the existing models in which floating point values are converted to fixed 8-bit values. This trades some accuracy for performance. If we set the score threshold detector arg to 0.5, we’ll be able to detect the first car:

tcv --model ssd_mobilenet_v1_coco_edgetpu --video demo_videos/cars_horizontal.mp4 --nowindow --args score=0.5
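To see why lowering the threshold admits the missed car, here is a minimal sketch of score filtering. The detection records and the `filter_by_score` helper are hypothetical. Only the 0.6796875 score comes from the CPU log earlier; the other scores are made up for illustration, and the use of `>=` rather than `>` is an assumption about how the threshold is applied.

```python
from typing import Dict, List


def filter_by_score(detections: List[Dict], threshold: float) -> List[Dict]:
    """Keep only detections whose confidence score meets the threshold."""
    return [d for d in detections if d["score"] >= threshold]


detections = [
    {"label": "car", "score": 0.6796875},  # score seen in the CPU log
    {"label": "car", "score": 0.55},       # hypothetical weaker detection
    {"label": "car", "score": 0.30},       # hypothetical spurious detection
]

print(len(filter_by_score(detections, 0.6)))  # 1
print(len(filter_by_score(detections, 0.5)))  # 2
```

Dropping the threshold from 0.6 to 0.5 lets the weaker detection through, and lowering it further would start to admit the spurious one as well.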

The trade-off is that a lowered score threshold is likely to produce more false detections. The other interesting thing is that one Pi CPU core is pegged at 100% even though the object detector inference is run on the Edge TPU device. This provides a clue to our bottleneck issue, something we’ll address in the next article.

We now have a framework that runs on both Raspberry Pi and Windows, which allows us to easily evaluate traffic speed detection on both CPU and AI accelerator hardware using different video sources.

Next Step

The final article will discuss improvements we can make to the software in terms of performance or accuracy. We’ll also compare our homebrew open-source system to commercial vehicle speed detection systems.